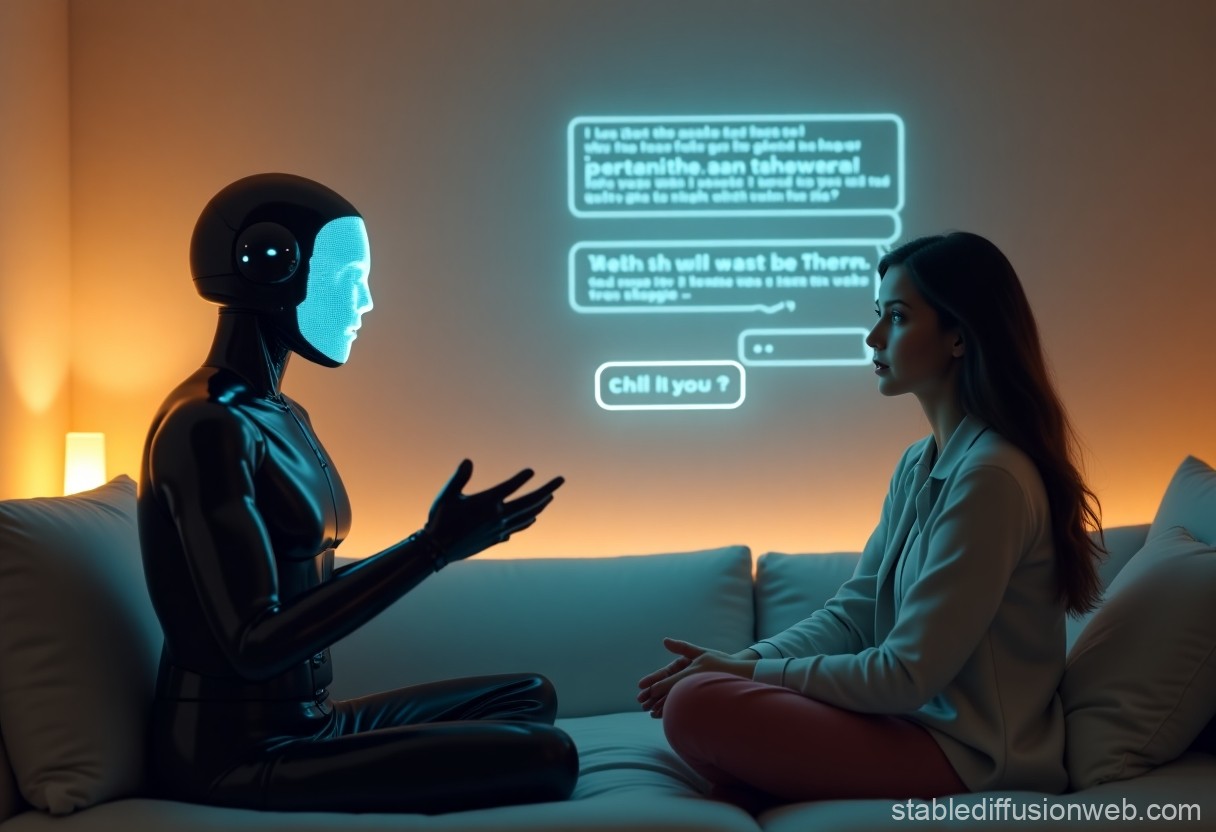

People are using ChatGPT as a makeshift therapist—cheap, always available, and judgment-free. But experts warn it’s no replacement for human empathy and could even give harmful advice. 😉

Why? It’s accessible, feels anonymous, and some find it helpful for journaling or cognitive behavioral therapy (CBT) exercises. Some studies suggest short-term benefits for anxiety and depression.

But… AI lacks real emotional intelligence and might reinforce negative thoughts. And privacy? Questionable at best.

Verdict: Use it as a supplement, not a substitute. And maybe don’t share your deepest secrets with a chatbot.